The fine-tuned model, Llama Chat, leverages publicly available instruction datasets and over 1 million human annotations. Llama 2 models are trained on ... Customize Llama's personality by clicking the settings button. The chatbot can explain concepts, write poems and code, solve logic puzzles, or even name your pets. Llama 2 is a collection of pretrained and fine-tuned generative text models ranging in scale from 7 billion to 70 billion parameters. This is the repository for the 70B fine-tuned model, optimized for ... In this work, we develop and release Llama 2, a collection of pretrained and fine-tuned large language models (LLMs) ranging in scale from 7 billion to 70 billion parameters.

LLaMA-65B and 70B perform optimally when paired with a GPU that has a minimum of 40GB VRAM. A CPU at 4.5 t/s, for example, will probably not run 70B at 1 t/s. More than 48GB VRAM will be needed for 32k context, as 16k is the maximum that fits in 2x ... One reported CPU-only result: 3.81 tokens per second with llama-2-13b-chat.ggmlv3.q8_0.bin. Opt for a machine with a high-end GPU like NVIDIA's RTX 3090 or RTX 4090, or a dual-GPU setup, to accommodate the ... This blog post explores the deployment of the Llama 2 70B model on a GPU to create a Question-Answering (QA) system.

I tried out a q6 of L2-70B Base (GGML). The hardware is a Ryzen 3600 with 64GB of DDR4 at 3600MHz. Llama 2 is broadly available to developers and licensees through a variety of hosting providers and on the Meta website. The performance of a Llama 2 model depends heavily on the hardware it's running on. Using llama.cpp, llama-2-70b-chat converted to fp16 (no quantisation) works with 4 A100 40GBs with all layers offloaded, and fails with three or ...
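The fp16 report above is consistent with simple arithmetic on the weights alone. The sketch below is a rough estimate, not a sizing tool: it assumes a nominal 70 billion parameters, counts only the weights (no KV cache, activations, or runtime overhead), and treats quantized formats as using exactly their nominal bits per weight, which real GGML formats exceed. The function names are made up for this illustration.

```python
import math


def weight_memory_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


def min_gpus(n_params: float, bits_per_weight: float, vram_gb_per_gpu: float) -> int:
    """Smallest number of identical GPUs whose combined VRAM holds the weights.

    Assumption: weights split evenly across GPUs and nothing else needs VRAM,
    so this is a lower bound rather than a guarantee.
    """
    return math.ceil(weight_memory_gb(n_params, bits_per_weight) / vram_gb_per_gpu)


if __name__ == "__main__":
    n_params = 70e9  # nominal parameter count for the 70B model

    # fp16 stores 16 bits per weight.
    print("fp16 weights (GB):", weight_memory_gb(n_params, 16))
    print("A100 40GB cards needed for fp16:", min_gpus(n_params, 16, 40))

    # A nominal 4-bit quantization, ignoring per-block scale overhead.
    print("4-bit weights (GB):", weight_memory_gb(n_params, 4))
    print("40GB cards needed for 4-bit:", min_gpus(n_params, 4, 40))
```

Under these assumptions the fp16 weights come to about 140 GB, which exceeds three 40GB cards (120 GB) and fits in four (160 GB). A nominal 4-bit quantization comes to about 35 GB, which is in line with the 40GB minimum quoted earlier.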

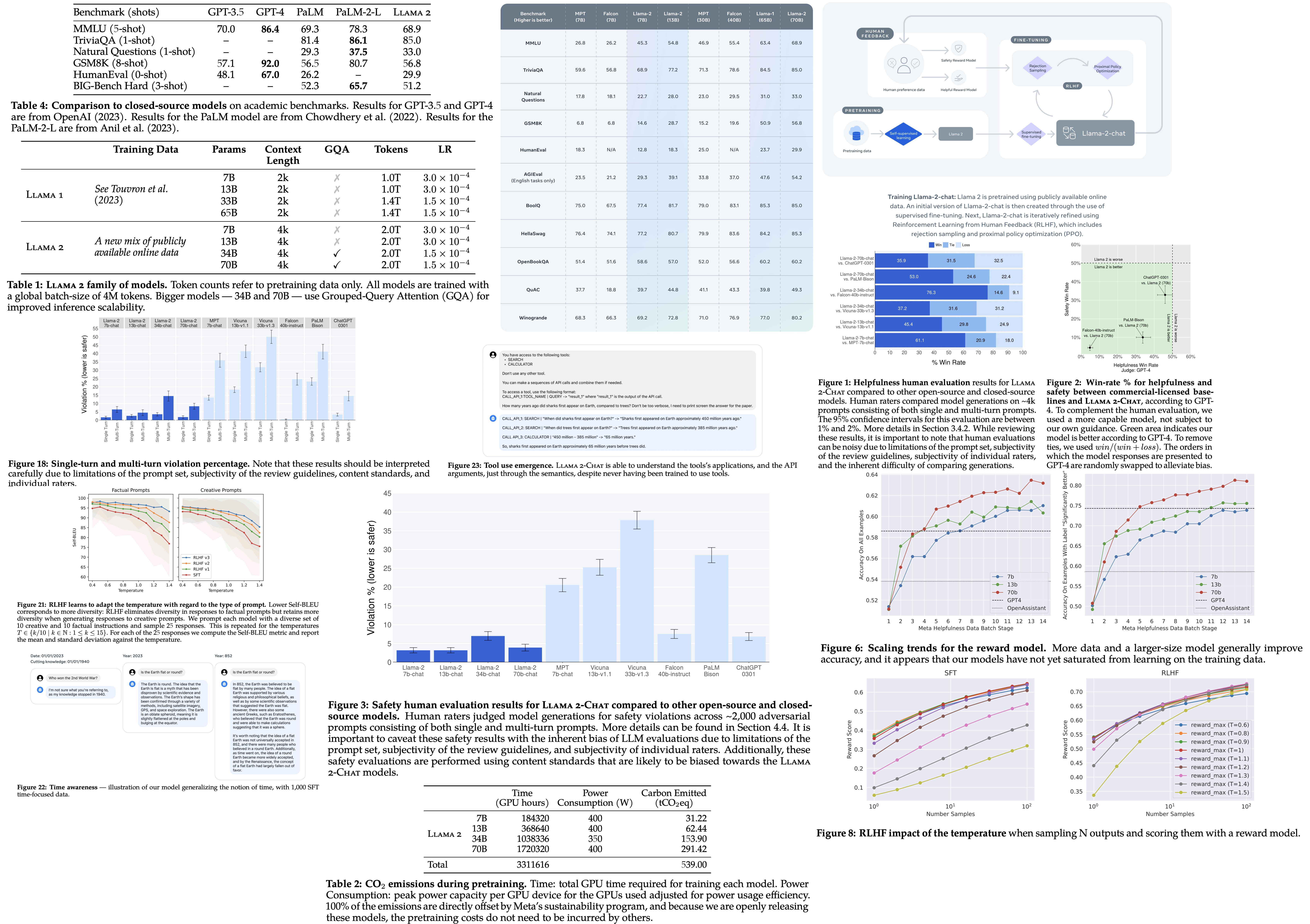

The Llama 2 paper describes the architecture in good detail to help data scientists recreate and fine-tune the models, unlike OpenAI papers, where you have to deduce it. Jose Nicholas Francisco (published 08/23/23, updated 10/11/23): "Llama 1 vs ... Meta's Genius Breakthrough in AI Architecture: Research Paper Breakdown". In this work, we develop and release Llama 2, a family of pretrained and fine-tuned LLMs, Llama 2 and Llama 2-Chat, at scales up to 70B parameters. On the series of helpfulness and safety ...
